In this use case, we look at how buffering can be reduced on the last mile of delivery, and we share our customer’s impressive metrics with you. But first, some basics.

Where does the buffering come from?

The main cause of buffering is a slowdown in the download process. When the next chunk isn’t yet available for playback, the player has no choice other than to lower the bitrate or, when that doesn’t help, to freeze playback until the buffer fills again. So it’s a bandwidth issue in the first place, but does this really have to be the norm?
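The decision described above can be sketched in a few lines. This is a minimal illustration, not how any particular player implements it: the bitrate ladder, the function name `next_action`, and the returned tuples are all assumptions made for the example.

```python
from typing import Optional, Tuple

# Hypothetical bitrate ladder in kbit/s; real ladders depend on the encoding setup.
BITRATE_LADDER_KBPS = [400, 1200, 2500, 5000]


def next_action(throughput_kbps: float, buffered_seconds: float) -> Tuple[str, Optional[int]]:
    """Decide what to do before requesting the next chunk.

    Returns ("download", bitrate) or ("stall", None).
    """
    # Renditions that the measured download speed can sustain.
    fitting = [b for b in BITRATE_LADDER_KBPS if b <= throughput_kbps]
    if fitting:
        # Normal case: take the best rendition that still fits.
        return ("download", max(fitting))
    if buffered_seconds > 0:
        # Nothing fits, but buffered video can absorb the shortfall for a while:
        # fall back to the lowest rendition.
        return ("download", BITRATE_LADDER_KBPS[0])
    # Nothing fits and the buffer is empty: playback freezes.
    return ("stall", None)
```

With a healthy connection the sketch picks a high rendition; as the measured throughput drops it steps down the ladder, and it stalls only once the buffer is empty as well.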

For decades it was a "game" with just two sides: the downloader (the viewer) and the uploader (the CDN). The effective data transmission rate couldn’t be higher than the lower of the bandwidths available at these two points. And no matter where exactly things go wrong, on the CDN side or on the viewer’s side, the buffering remains unless the bottleneck is eliminated.

Distributed CDN means multiple traffic sources

Everything changes when a video player gets multiple connections to a distributed cloud of devices and maintains them during the entire session. Of course, some devices could go offline (just as some CDN edges could become unreachable), but if a cloud is big enough, every viewer has many active nodes to download traffic from.

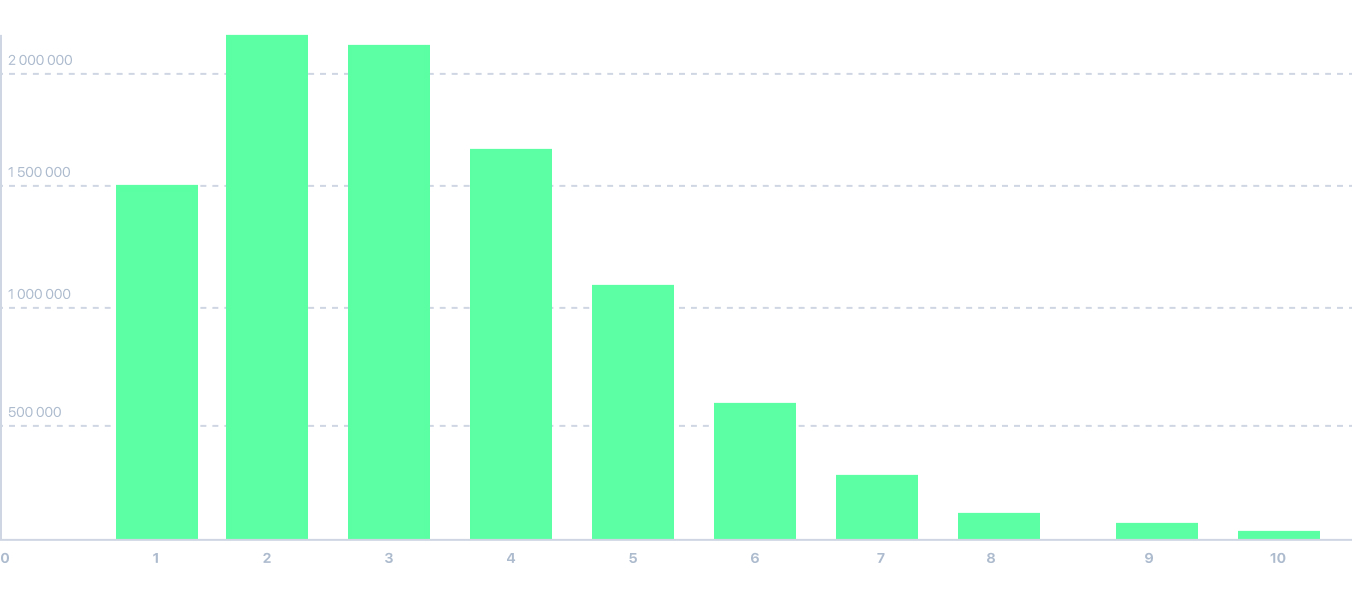

Active traffic sources
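To make the difference between the two-sided case and the multi-source case concrete, here is a deliberately idealized sketch. It assumes that the rates of parallel sources simply add up and that the viewer’s downlink is the only cap; protocol overhead, congestion, and peers going offline are ignored. The function names are hypothetical.

```python
from typing import Iterable


def single_source_rate(source_uplink_mbps: float, viewer_downlink_mbps: float) -> float:
    """Two-sided case: the slower side sets the effective rate."""
    return min(source_uplink_mbps, viewer_downlink_mbps)


def multi_source_rate(source_uplinks_mbps: Iterable[float], viewer_downlink_mbps: float) -> float:
    """Multi-source case: uplinks add up until the viewer's downlink is saturated."""
    return min(sum(source_uplinks_mbps), viewer_downlink_mbps)
```

Under these assumptions, a viewer with a 50 Mbit/s downlink who is limited to 2 Mbit/s by a single congested source would get 9 Mbit/s from three sources offering 2, 3, and 4 Mbit/s. No individual source has to be fast for the total to be sufficient.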

This leads to a download speed increase

Under usual circumstances, a download slowdown would inevitably lead to a bitrate downscale, and then to buffering at some point. But having many active channels actually fixes that problem! Statistically, we see that viewers’ devices are able to download the video several times faster from the other devices in a distributed cloud than from the CDN!

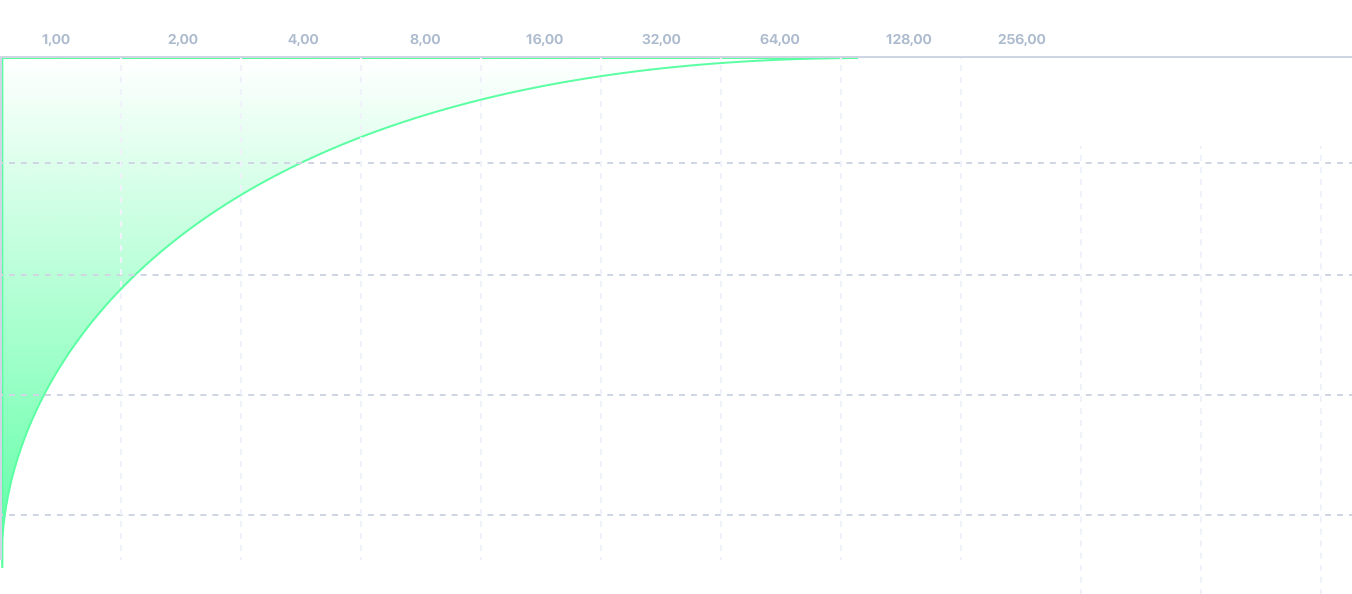

P2P bandwidth to CDN bandwidth ratio

This results in buffering rate decrease

Remember: the faster the download, the lower the chances of buffering. The buffering rate is never zero, simply because some devices are in such extreme conditions that even several traffic sources are unable to deliver the video in time. However, our statistics show that a P2P CDN has a positive effect on buffering.

Buffering rate during the day

If a viewer has a set of active channels to download from, the chances of buffering fall by 50%!

Being on the last mile of the supply chain, we believe the critical thing for keeping QoE at the highest level is the ability to detect video download problems and the ability to change the way a particular device is downloading the file in due time. When every single video player has multiple live connections with multiple distributed sources, there is always a lightning-fast way to switch from a bad source to a good one and avoid the download slowdown which usually causes buffering.

Andrei Klimenko,

CEO Teleport Media
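The idea of detecting a bad source and switching to a good one can be illustrated with a small sketch. It is not Teleport Media’s implementation. The class `SourceMonitor`, the exponentially weighted moving average, the smoothing factor, and the threshold are all assumptions chosen for the example.

```python
from dataclasses import dataclass, field
from typing import Dict, Optional


@dataclass
class SourceMonitor:
    """Keeps a smoothed throughput estimate per source (CDN edge or peer)."""

    min_rate_kbps: float      # below this, a source counts as "bad" (assumed threshold)
    alpha: float = 0.5        # smoothing factor for the moving average (assumed value)
    rates: Dict[str, float] = field(default_factory=dict)

    def report(self, source: str, measured_kbps: float) -> None:
        """Fold a new throughput measurement into the source's estimate."""
        previous = self.rates.get(source)
        if previous is None:
            self.rates[source] = measured_kbps
        else:
            self.rates[source] = self.alpha * measured_kbps + (1 - self.alpha) * previous

    def drop(self, source: str) -> None:
        """Forget a source that went offline."""
        self.rates.pop(source, None)

    def pick(self, current: Optional[str] = None) -> Optional[str]:
        """Stay on the current source while it is healthy, else take the fastest one."""
        if current in self.rates and self.rates[current] >= self.min_rate_kbps:
            return current
        if not self.rates:
            return None
        return max(self.rates, key=self.rates.get)
```

A player would call `report` after every chunk and `pick` before the next request. Because estimates for the other sources are already maintained, the switch needs no new measurement when the current source degrades.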

Keen to learn more? We’ll share more insights with you!

Contact us today to start your free trial with unlimited traffic.