For this exact reason, Teleport Media takes an architectural approach when developing new ways to fine-tune the P2P network for a particular type of streaming: online linear TV, sports events, other live events, catch-up, or VOD content.

One of the latest global sports events the Teleport Media P2P network worked on was Euro 2020. To study and improve the video delivery performance of the P2P network, we compared its effectiveness in two different stacks (DASH and HLS) used by two web broadcasters that streamed Euro 2020 games from the 1/8 finals to the final in different quality: 720p and 1080p respectively.

Euro 2020 Stats in Teleport Media P2P network

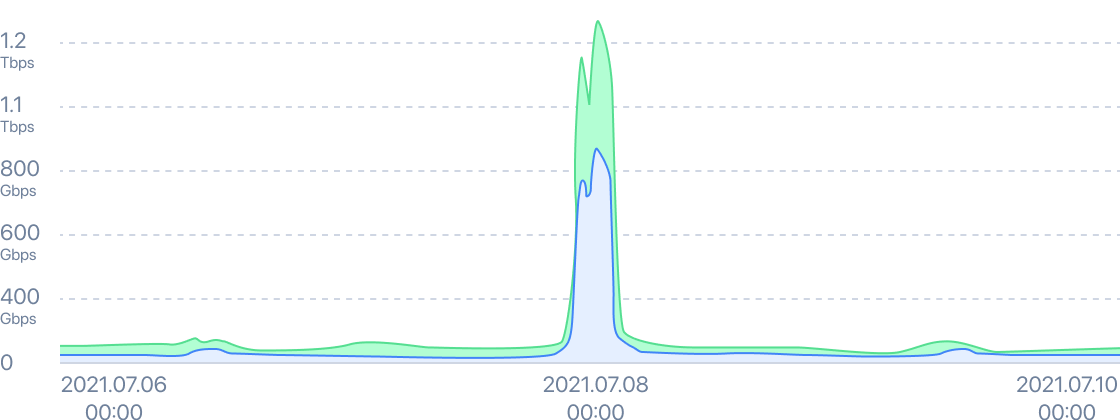

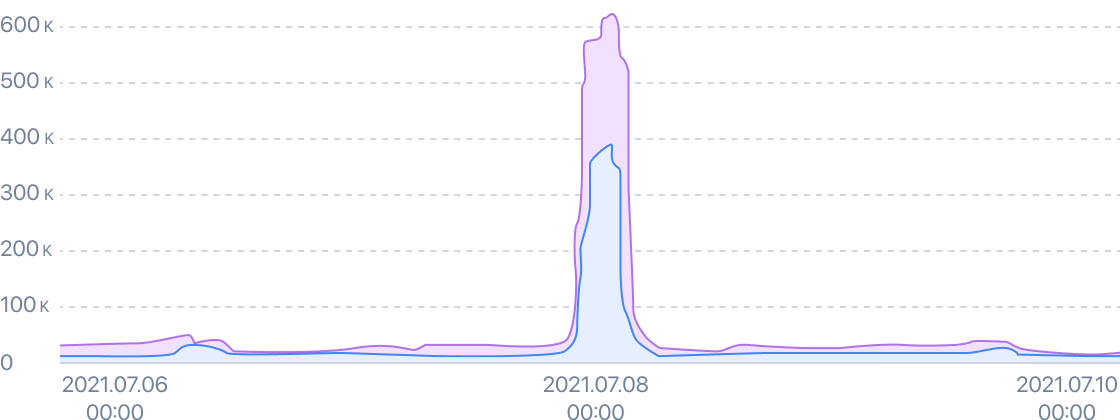

To get straight to the point, our two clients each streamed more than a dozen playoff games at Euro 2020. The number of simultaneous viewers peaked at up to 470,000, with peer-to-peer efficiency reaching a maximum of 70%, meaning that 70% of all traffic was delivered over P2P instead of the traditional CDN. The total number of unique viewers who watched a game (partially or fully) reached 1 million for the 1/8, 1/4, and 1/2 final games, and 1.26 million for the final.

Bandwidth

Total users connected

With this approach, a broadcaster doesn’t have to urgently add expensive servers in different locations or invest in developing its internal infrastructure. The architectural approach is a flexible, scalable, software-based solution that requires no new investment. What’s more, it’s free: it is Teleport Media’s own R&D, and its results are aimed at helping us do our job (video delivery) better.

Now, let’s see what happened at the Euro 2020 games and what we decided to do about the P2P settings.

P2P in DASH vs P2P in HLS

During every live football game, we tracked a variety of video delivery metrics on Teleport Media’s P2P network. When we compared the overall performance of the P2P network across the two stacks (DASH and HLS), we drew two main conclusions:

- With DASH in HD quality, almost 80% of P2P network connections were successful. The video chunk size was 2.5 Mb and the buffer was 5 to 6 seconds, which was quite enough for fast uploads and good P2P results.

- The percentage of successful connections and downloads in Full HD on HLS was only half of the DASH level. The chunk size was 6 Mb and the buffer was 8 seconds, so the player had limited time to download the required video chunk over P2P. The request then went to the default CDN, which increased download time and slowed the buffer refill.

The buffer works like a container that receives video chunks of different sizes from different sources, stores them for a while, and feeds them to the video player at a steady pace. The task of the video delivery infrastructure is to keep the buffer filled to a certain level at all times, for example, 30 seconds. Our task is to fill the buffer by downloading from the P2P network, to maintain and increase P2P download speed through fine-tuning, and to increase P2P efficiency in general. In other words, the goal is to deliver most of the traffic over P2P.
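The container model described above can be sketched as a toy Python class. This is only an illustration under stated assumptions: the class name `PlaybackBuffer`, its methods, and the 4-second chunk length are hypothetical and are not part of Teleport Media’s software. The 30-second target is the example value from the text.

```python
from dataclasses import dataclass, field


@dataclass
class PlaybackBuffer:
    """Toy model of a player buffer, measured in seconds of video (hypothetical)."""

    target_seconds: float = 30.0  # fill level the delivery layer aims for
    level_seconds: float = 0.0    # seconds of video currently buffered
    delivered: dict = field(default_factory=lambda: {"p2p": 0.0, "cdn": 0.0})

    def needs_data(self) -> bool:
        """True while the buffer is below its target fill level."""
        return self.level_seconds < self.target_seconds

    def push(self, chunk_seconds: float, source: str) -> None:
        """Store a downloaded chunk and remember which source delivered it."""
        self.level_seconds += chunk_seconds
        self.delivered[source] += chunk_seconds

    def play(self, elapsed_seconds: float) -> float:
        """Consume buffered video; return how many seconds the player stalled."""
        played = min(self.level_seconds, elapsed_seconds)
        self.level_seconds -= played
        return elapsed_seconds - played

    def p2p_share(self) -> float:
        """Fraction of delivered video that came from peers."""
        total = sum(self.delivered.values())
        return self.delivered["p2p"] / total if total else 0.0


# Four assumed 4-second chunks arrive: three from peers, one from the CDN.
buf = PlaybackBuffer()
for source in ["p2p", "p2p", "cdn", "p2p"]:
    buf.push(4.0, source)

stall = buf.play(10.0)   # 16 s were buffered, so 10 s play without stalling
share = buf.p2p_share()  # 12 of the 16 delivered seconds came from peers
```

In this model, "P2P efficiency" is simply the share of buffered video that came from peers, and a stall is the playback time the buffer could not cover.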

We realized that the settings for the much "heavier" Full HD had to be changed so that filling the buffer from P2P would not slow down and the expected benefits of P2P delivery would materialize.

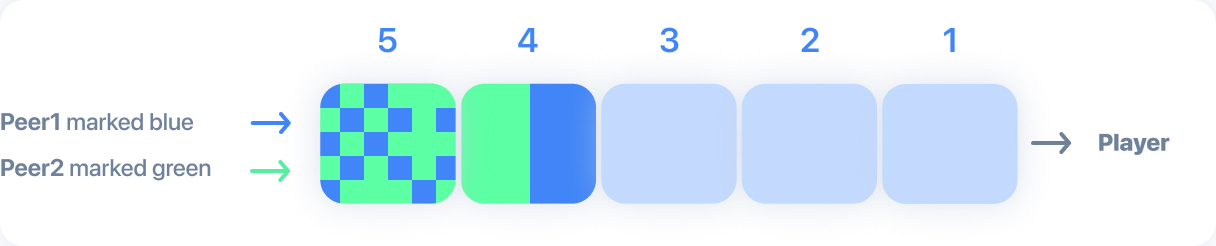

The Race Download

The data analysis shows that when video chunks are small (720p or lower), downloads are fast enough that no additional settings are needed. With high-resolution video (1080p or higher), chunks are much larger, but the duration of each chunk stays the same, so the time available to download it also stays the same. The download speed therefore has to increase several times for the player’s buffer to fill smoothly. However, no peer has changed its CDN provider, just as the CDN provider has not changed its servers. So what should happen?
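To put a rough number on "several times", here is a small worked calculation using the chunk sizes quoted earlier (2.5 Mb in HD, 6 Mb in Full HD). The chunk duration of 4 seconds is an assumption made only for illustration, and the function name `required_speed` is hypothetical. The resulting ratio does not depend on the assumed duration.

```python
def required_speed(chunk_size: float, chunk_duration_s: float) -> float:
    """Minimum average download speed needed to fetch one chunk
    within the time it takes to play one chunk."""
    return chunk_size / chunk_duration_s


CHUNK_DURATION_S = 4.0  # assumed chunk length in seconds (not stated in the article)

hd_speed = required_speed(2.5, CHUNK_DURATION_S)       # HD chunk size from the article
full_hd_speed = required_speed(6.0, CHUNK_DURATION_S)  # Full HD chunk size from the article

# How much faster the Full HD download has to be for the same chunk duration.
speedup = full_hd_speed / hd_speed  # 6 / 2.5 = 2.4
```

Under these assumptions, a peer that keeps up comfortably in HD has to deliver about 2.4 times faster in Full HD just to hold the buffer level steady.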

For example, our latest customer case, "How to transform the quantity into quality and decrease buffering 50%", shows that when a viewer’s device has several sources to download chunks from and can switch between them, its average download speed is higher (by 2, 4, and even 10 times) than when the default CDN is the only source. Can the situation be improved further? Yes: instead of downloading the whole video chunk from one peer, we can break the chunk into parts, for example turning a 6 Mb chunk into three 2 Mb pieces, download them in parallel, and identify the fastest peer along the way. If the peers download at approximately equal speeds, the download stays parallel; if one peer is much faster, it takes over the work remaining from the other peers. This is how the Race Download feature works.
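The idea can be illustrated with a small deterministic simulation. This is a sketch of the behaviour described above, not Teleport Media’s implementation: the function `race_download`, the `takeover_ratio` threshold that decides when a peer counts as "much faster", and the peer speeds are all assumptions.

```python
EPS = 1e-9  # tolerance for treating a part as fully downloaded


def race_download(chunk_size, peer_speeds, takeover_ratio=2.0):
    """Simulate a parallel chunk download with takeover by a much faster peer.

    The chunk is split into equal parts, one per peer. When a peer finishes
    its part and is at least `takeover_ratio` times faster than a peer that
    is still downloading, it takes over that peer's remaining work.
    Returns (finish_time, amount_downloaded_per_peer).
    """
    n = len(peer_speeds)
    remaining = [chunk_size / n] * n  # work currently assigned to each peer
    done = [0.0] * n                  # amount each peer actually delivered
    clock = 0.0

    while any(r > EPS for r in remaining):
        active = [i for i in range(n) if remaining[i] > EPS]
        # Advance time until the next active peer finishes its assignment.
        step = min(remaining[i] / peer_speeds[i] for i in active)
        clock += step
        for i in active:
            got = min(remaining[i], peer_speeds[i] * step)
            remaining[i] -= got
            done[i] += got

        # Idle peers, fastest first, take over work from much slower peers.
        idle = sorted((i for i in range(n) if remaining[i] <= EPS),
                      key=lambda i: -peer_speeds[i])
        busy = [i for i in range(n) if remaining[i] > EPS]
        for fast in idle:
            for slow in busy:
                much_faster = peer_speeds[fast] >= takeover_ratio * peer_speeds[slow]
                if remaining[slow] > EPS and much_faster:
                    remaining[fast] += remaining[slow]
                    remaining[slow] = 0.0

    return clock, done


# A 6 Mb chunk split into three 2 Mb parts; speeds are in Mb per second (assumed).
equal_time, equal_split = race_download(6.0, [1.0, 1.0, 1.0])
race_time, race_split = race_download(6.0, [1.0, 1.0, 4.0])
static_time, _ = race_download(6.0, [1.0, 1.0, 4.0], takeover_ratio=float("inf"))
```

With three equally fast peers, the download stays parallel and every peer delivers its own 2 Mb part. With one peer four times faster, that peer finishes its part after 0.5 seconds and takes over the rest, so the chunk completes in 1.25 seconds instead of the 2 seconds a fixed split would need.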

Teleport Media determines the nearest and most reliable peers to establish such connections. This will significantly increase peer-to-peer download speed for high-resolution chunks, first in Full HD and then in 4K. Tests are now underway, and we will soon launch the Race Download feature for our customers. You can be among the first to try it: sign up for the Teleport Media dashboard and stay tuned!

Every day, more than 2 million devices receive TV and on-demand traffic using Teleport Media technology. Streaming optimization tools built on an architectural approach are needed to ensure that video is delivered in the best quality, even when all viewers are watching the same content at the same time.

We at Teleport Media strive to make P2P video streaming effective for any client, and our goal is to find new architectural solutions for existing decentralized delivery and systematically improve it.

Follow this story on the Teleport Media website and our Medium blog to stay up to date on how we help OTT streaming services deliver video at scale and keep any video player from buffering!